Scientists make next-generation sequencing (NGS) look easy, but the process is complex. After all, the technology lets them read one of the most complicated puzzles nature has to offer: DNA. One of the most significant challenges researchers face in NGS is bottlenecks.

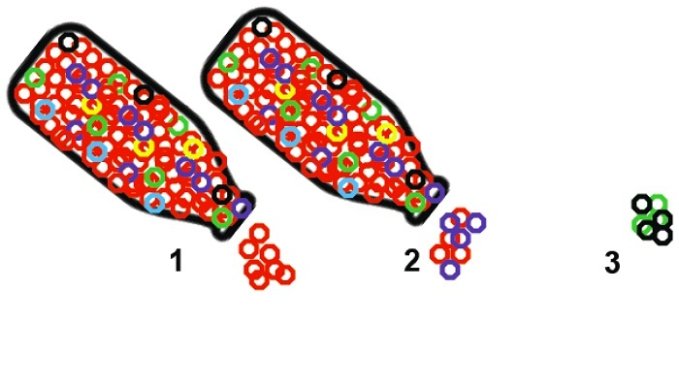

By definition, a bottleneck is a narrowing that impedes flow. In next-generation sequencing, bottlenecks come from the sheer size of the datasets. For example, a single human genome contains roughly 3 billion DNA base pairs. The sequence of those bases helps determine that person’s physical and biochemical characteristics.

Understanding DNA sequences allows researchers to explore disease processes at their core. However, sequencing also generates a large amount of data that must be analyzed, and NGS bottlenecks are the result.


The Basis of Metagenomics

Metagenomics is the study of genetic material recovered directly from a sample that contains many organisms at once, such as soil or seawater. That genetic material is made of nucleotides, the units that join together to form DNA. If you imagine building blocks, a nucleotide is a block, and DNA is the structure formed by many blocks.

A nucleotide consists of:

- One of four DNA chemical bases: adenine (A), guanine (G), cytosine (C), or thymine (T)

- A sugar molecule (deoxyribose, in DNA)

- A phosphate group
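Because the sugar and phosphate are the same in every DNA nucleotide, sequence files record only the base, as one letter out of A, C, G, and T. Four possibilities fit in 2 bits, so a sequence can be stored compactly. The minimal sketch below illustrates that idea; `pack` and `unpack` are hypothetical helpers, not the format of any particular sequencing tool.

```python
# Only the base varies between DNA nucleotides, so each one can be stored
# as a 2-bit code (4 possible bases = 2**2).
BASE_TO_BITS = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def pack(seq: str) -> int:
    """Pack an A/C/G/T string into a single integer, 2 bits per base."""
    value = 0
    for base in seq.upper():
        value = (value << 2) | BASE_TO_BITS[base]
    return value


def unpack(value: int, length: int) -> str:
    """Rebuild the string; length is required because leading A's encode as 0 bits."""
    bases = []
    for _ in range(length):
        bases.append(BITS_TO_BASE[value & 0b11])
        value >>= 2
    return "".join(reversed(bases))


seq = "GATTACA"
packed = pack(seq)
print(packed, unpack(packed, len(seq)))
```

Real reads also contain ambiguous calls such as `N`, which this sketch does not handle (`pack` raises a `KeyError` for them).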

NGS makes shotgun metagenomics possible. In other words, scientists can sequence the genetic material of all the organisms in a sample at one time. While this approach enables scientists to understand the diversity of an environment, it produces large, mixed datasets that can create bottlenecks.
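A shotgun sample mixes reads from every organism present, so one early analysis step is working out which organism each read came from. The toy sketch below assumes a small set of hypothetical reference genomes and assigns each read to the reference that shares the most k-mers (overlapping substrings of length k) with it. It is a simplified illustration of k-mer matching, not the algorithm of any specific classifier.

```python
from collections import Counter

K = 5  # toy k-mer length, chosen only to keep the example small


def kmers(seq: str, k: int = K) -> set[str]:
    """Return every overlapping substring of length k in seq."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def classify(reads: list[str], references: dict[str, str]) -> Counter:
    """Count reads per reference, assigning each read to the reference
    that shares the most k-mers with it ("unclassified" if none match)."""
    ref_kmers = {name: kmers(genome) for name, genome in references.items()}
    counts: Counter = Counter()
    for read in reads:
        read_kmers = kmers(read)
        scores = {name: len(read_kmers & ks) for name, ks in ref_kmers.items()}
        best = max(scores, key=scores.get)
        counts[best if scores[best] > 0 else "unclassified"] += 1
    return counts


# Hypothetical reference genomes and a mixed set of reads from one sample.
references = {
    "organism_A": "ATGCGTACGTTAGCCGATAG",
    "organism_B": "TTTTGGGGCCCCAAAATTTT",
}
reads = ["GCGTACGTTA", "GGGGCCCCAA", "ACACACACAC"]
print(classify(reads, references))
```

Every read is compared against every reference here, so the work grows with both the number of reads and the size of the reference collection. That scaling is one reason mixed samples are expensive to analyze.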

What are NGS Bottlenecks?

In NGS, the instrumentation is outpacing the informatics. Sequencing DNA at breakneck speed has its advantages, but it also creates analysis problems: the faster sequencing technology gets, the bigger an issue bottlenecks become. The sheer volume and scale of the data coming off sequencers make the information hard to analyze and store.

Data organization also leads to bottlenecks. The size of the data makes it difficult to curate, and without proper curation, it is hard to form and test a hypothesis about the information.

How Bottlenecks Slow Down Research

Next-generation sequencing starts with library preparation. This time-consuming process involves fragmenting the DNA or RNA strands. Some NGS workflows also require PCR amplification to increase the amount of genetic material in the sample. If done incorrectly, library preparation can introduce errors into the sample and corrupt any results that come from it. At this stage, the researcher will likely not realize the error exists.

Taking the proper time to do library preparation is the first bottleneck: rush it, and you may corrupt the entire process. The second potential bottleneck arises if an error does occur, because researchers must then spend precious time confirming that the error exists and determining where it started.
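One common check for problems introduced during PCR amplification is the fraction of duplicate reads: if amplification was overdone, many reads are copies of the same original fragment. The sketch below is a simplified version that counts exact sequence matches only. Established pipelines usually mark duplicates by alignment position instead, and what counts as "too high" depends on the experiment.

```python
from collections import Counter


def duplicate_fraction(reads: list[str]) -> float:
    """Fraction of reads that are exact copies of a read already seen."""
    if not reads:
        return 0.0
    counts = Counter(reads)
    # Each distinct sequence keeps one "original"; every extra copy is a duplicate.
    duplicates = sum(n - 1 for n in counts.values())
    return duplicates / len(reads)


# Hypothetical reads: three copies of one fragment plus two unique reads.
reads = ["ACGTACGT", "ACGTACGT", "ACGTACGT", "TTGACCAA", "GGCATTCA"]
print(f"{duplicate_fraction(reads):.0%}")
```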

Bottlenecks and Data Storage

Understandably, next-generation sequencing creates a large quantity of data. It can be challenging for research groups to find the computational resources needed to process and store this data. In addition, data storage centers are reaching capacity and struggling to find more space, so they constantly run the risk of permanently losing critical data.
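A back-of-the-envelope estimate shows where the storage pressure comes from. The figures below are assumptions for illustration: a haploid human genome of about 3.1 billion bases, 30x coverage (each position read about 30 times), and roughly 2 bytes per sequenced base in an uncompressed FASTQ-style file (one character for the base and one for its quality score, ignoring headers and line breaks).

```python
GENOME_BASES = 3.1e9        # assumed: approximate haploid human genome size
COVERAGE = 30               # assumed: sequencing depth
BYTES_PER_READ_BASE = 2     # assumed: 1 byte base + 1 byte quality score

# Four bases fit in 2 bits, so one finished sequence is fairly small...
raw_2bit_mb = GENOME_BASES * 2 / 8 / 1e6
# ...but the raw reads behind it are many times larger.
fastq_gb = GENOME_BASES * COVERAGE * BYTES_PER_READ_BASE / 1e9

print(f"One genome packed at 2 bits per base: ~{raw_2bit_mb:.0f} MB")
print(f"Uncompressed reads at {COVERAGE}x coverage: ~{fastq_gb:.0f} GB")
```

Under these assumptions, a single sample's raw reads take hundreds of times more space than the finished sequence, before any intermediate analysis files are counted.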

What is the answer to NGS bottlenecks? Like all technology, the process continues to evolve. Scientists and technicians are working to make the NGS process more efficient and to improve how its data is managed.